World Agri-Tech Panel Discusses Generative AI with Balanced Optimism

- Agriculture industry use cases for AI include crop protection, biological discovery, and enhancing the work of agricultural advisors.

- Still, there’s work to be done in addressing bias, data privacy, and explainability in order to build trust among consumers.

At a recent panel at World Agri-Tech Innovation Summit 2024, Ranveer Chandra led four experts in a conversation about the state of generative AI in agricultural technology. Representing AI technology providers and innovators building AI-powered agtech solutions, the panelists shared the most exciting current and near-future industry use cases for AI, and their approaches to AI ethics questions.

The panel comprised:

- Moderator: Ranveer Chandra, Managing Director, Research for Industry & CTO, Agri-Food, Microsoft

- Jeremy Williams, Head of Climate LLC, Digital Farming and Commercial Ecosystems, Bayer Crop Science

- Elizabeth Fastiggi, Head of Worldwide Business Development, Agriculture, AWS

- Feroz Sheikh, Group Chief Information and Digital Officer, Syngenta

- Elliott Grant, CEO, Mineral

While the conversation took an enthusiastic tone toward the value GenAI (and AI more broadly) can provide to agronomists, advisors, and individual farmers, it was also honest about the challenges that lie ahead.

A Revolution Still in its Infancy

Chandra opened the panel by saying that AI represents the fourth major technological shift in the past forty years, following the dawn of the personal computer, the World Wide Web, and the proliferation of mobile tech and the cloud.

The Generative AI Era is in its infancy, having only kicked off in earnest about 18 months ago. Still, Chandra said, AI’s two unique capabilities (to help computers understand the human user, and to help us make meaning from data) hold great promise for agriculture.

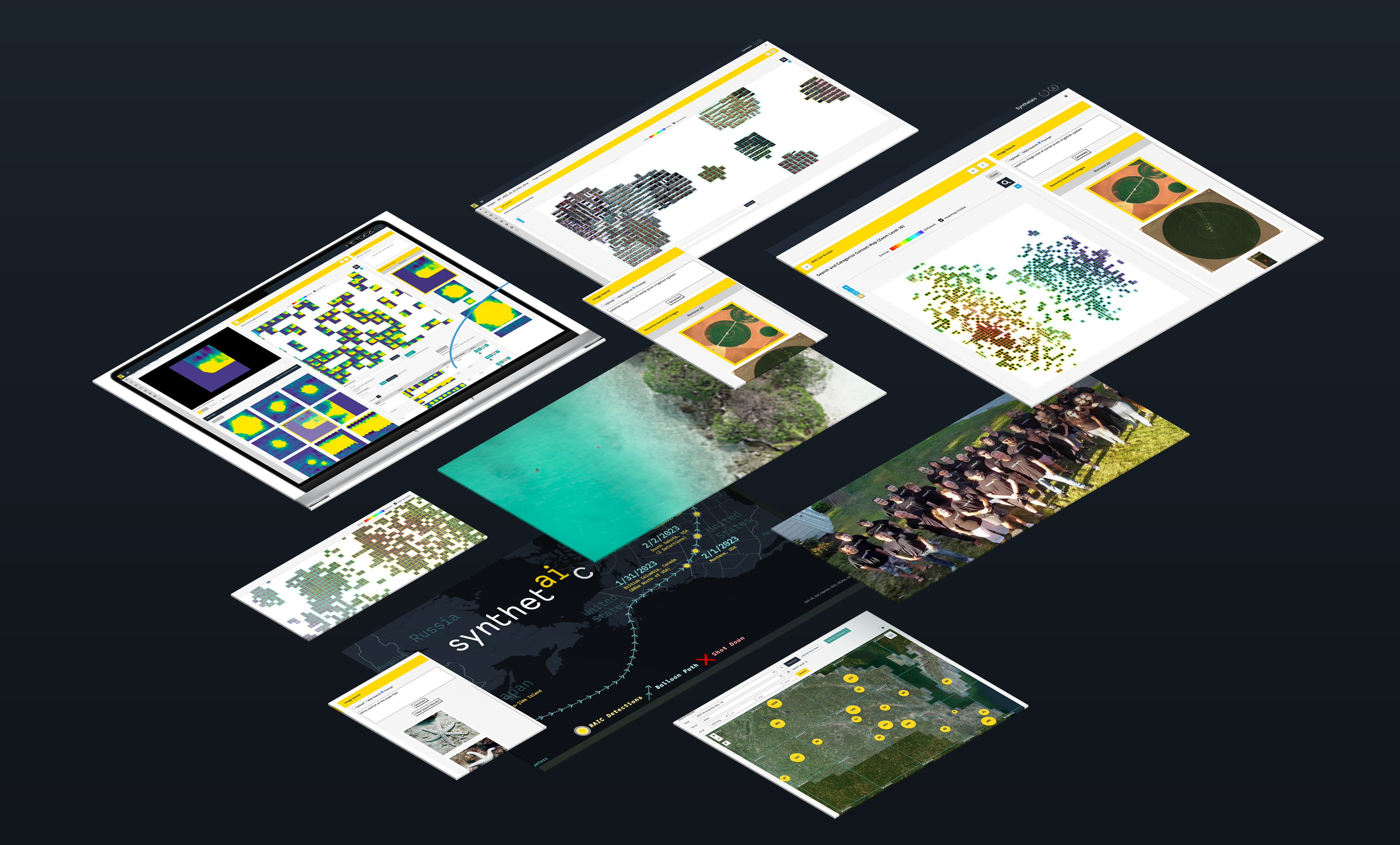

Early in the panel, Elliott Grant (CEO, Mineral) offered an important caveat: “There’s a tendency to call everything Gen AI because it’s... fashionable. … AI is used to understand and analyze data. And GenAI is different because it actually creates new content.” Indeed, the conversation that unfolded encompassed both generative AI, such as large language models (LLMs), and other AI, like the powerful, data-type-agnostic vector search in RAIC.

What AI Can Do for Agriculture

All four companies represented by the panelists have formed their own approaches to AI and started putting it to use in the field (pun intended).

Bayer Crop Science has trained recommendation engines to enhance the work of agricultural advisors and is actively exploring AI’s predictive potential for use cases including accelerating breeding efficiency.

For Syngenta, AI is a tool to “turbocharge” innovation and the research and development process. AI has already demonstrated its potential for accelerating biological discovery. Sheikh anticipates that, as LLMs can make engaging with large amounts of data feel more natural than ever, adoption of new GenAI technology is likely to be rapid.

Amazon Web Services invests in and builds AI across the lifecycle, from the infrastructure used to build foundation models to applications built on those models. Referring to a new partnership that will drive portfolio-wide notification and management of early warning signs of change, Fastiggi said, “We’re automating a task that would have normally required a visit to the farm.”

Mineral builds plant perception technology for crop protection, yield forecasting, supply chain management, and equipment automation.

Taken together, the panelists’ explanations of their companies’ strategic approaches to GenAI painted an exciting picture of the future of agtech.

Facing the Risks and Challenges

Moving the conversation to challenges and opportunities, Chandra said: “AI is [only] as good as the data it’s trained on.” He added that we’ve all seen AI “make mistakes,” and that hallucinations are only the tip of the iceberg of potential dangers. Bias and misuse, he said, pose major ethics questions for AI.

Grant put it plainly: “We have an obligation to the industry and to farmers and all the users that they can trust it. And I do think we’ve got a bit of a gap to close before we get there.” He described the use of “gold standard models,” against which new models are evaluated, as an exemplar for other industries to follow.

A key barrier to trust, Grant said, is explainability: “AI models are famously black-box.” Chain-of-Thought prompting, a process by which LLMs are made to “show their work,” is one route to being able to explain how a generative AI application is producing its result.
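To make the idea concrete, here is a minimal sketch of what Chain-of-Thought prompting might look like in practice. It was not presented by the panel; the function names (`build_cot_prompt`, `split_reasoning_and_answer`), the prompt wording, and the `Answer:` marker are all illustrative assumptions, and no particular LLM API is implied.

```python
def build_cot_prompt(question: str, context: str = "") -> str:
    """Wrap a question in a prompt that asks the model to show its work.

    Hypothetical helper: the wording and the 'Answer:' convention are
    assumptions for illustration, not a standard.
    """
    parts = []
    if context:
        parts.append(f"Context:\n{context}")
    parts.append(f"Question: {question}")
    parts.append(
        "Work through the problem step by step, numbering each step, "
        "then give the final recommendation on a new line starting with 'Answer:'."
    )
    return "\n\n".join(parts)


def split_reasoning_and_answer(response: str) -> tuple[str, str]:
    """Separate the model's visible reasoning from its final answer.

    If the 'Answer:' marker is missing, the whole response is treated as
    reasoning and the answer is returned as an empty string.
    """
    reasoning, marker, answer = response.rpartition("Answer:")
    if not marker:
        return response.strip(), ""
    return reasoning.strip(), answer.strip()
```

The prompt string would be sent to whichever model an application uses. Keeping the reasoning separate from the answer lets an advisor or farmer review the steps behind a recommendation, though the stated steps are not guaranteed to reflect how the model actually reached its result.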

Another barrier is bias. AI models can only understand that which they’ve been exposed to, so AI trained on data from a large-scale corn farm won’t be of much use to a smallholder farmer of plantains. Because many smallholder farmers across the world are women, Fastiggi said, geographically biased models carry an inherent element of gender bias, too. “It’s incumbent upon all of us to think about the biases that we already have in this industry,” she said. “Think consciously and critically about them, and think about how we avoid perpetuating them in how these foundation models are trained.”

An additional ethical point, raised by Williams, is data privacy: “Customers need to be confident that their data is being used in ways they’d permit.” It’s not enough, he said, for AI built on customer data to have internal, operational value; there also needs to be a value proposition to the customer. He also offered a litmus test for customer satisfaction with AI models. Asking “Would you be willing to recommend this model to one of your trusted customers?”, he explained, led to more incisive feedback from customers than a simple “Are you satisfied?”

Sheikh said that developing open data sets and open standards, allowing systems to talk to each other, would be a highly beneficial form of precompetitive work for the industry to undertake.

After all, as multiple panelists explained, we all stand to benefit from earning and establishing trust in AI technology.

Dreaming Big: The Next Five Years

As the panel wrapped up, Chandra invited panelists to imagine the next five years of innovation and name the emerging technologies they’re most excited about.

Of course, it’s impossible to know the future — even for the most advanced AI built to date. Williams suggested that the ceiling for AI is impossibly high, especially if the technology is democratized. “If we successfully lower the barrier for entry, this thing will go in directions that we can’t even imagine today.”

Grant said he was most excited about the opportunity to make a “paradigmatic shift” to continuous model retraining, which “unlocks the ability to do continuous monitoring and management.” In combination with embodied AI (“where the AI is actually engaging with the real world and building a world model continuously”), we could see what he spontaneously coined “ReGenAI.”

Challenged to name their boldest predictions for the next five years of AI, the panelists responded enthusiastically to Grant’s suggestion of an LLM that understands the human genome, while Fastiggi envisioned models that can predict future weather events in the face of climate change.

It’s an exciting moment in the history of AI. While it’s impossible to know what the future holds, it’s clear that the high rate of innovation will be both reflected in and driven by the agricultural industry.